What is a transform?

Transforms in XAML are an easy way to manipulate an element through a self-contained implementation, and they almost always run on the graphics processor instead of the lag-inducing central processor. Transforms are the heart of animations and beautiful user experiences.

There are two types of transforms: layout and render. A layout transform updates the layout of the UI as the element changes location, size, or rotation. A render transform changes the location, size, and rotation without updating the layout, which saves processor time.

Important: Windows Store developers have only render transforms. If you need a layout transform, the WinRT XAML Toolkit on CodePlex includes a LayoutTransformControl that provides one. But please be aware of the performance implications of such a transform.

How to use transforms

To enable a transform, we set an element’s RenderTransform property to a TranslateTransform, RotateTransform, or ScaleTransform. If we want to use multiple transforms, we set the RenderTransform property to a CompositeTransform.

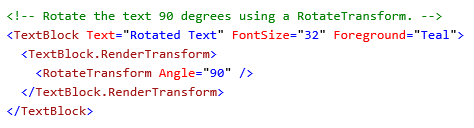
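To make the CompositeTransform idea concrete, here is a minimal C# sketch (plain .NET, no XAML types) of how a single point moves through one. It assumes the order documented for CompositeTransform: scale, then skew, then rotation, then translation, with CenterX and CenterY as the origin for scaling and rotation. Skew is left out to keep it short, and the class and method names are hypothetical, not part of the framework.

```csharp
using System;

// Hypothetical helper: maps one point through a CompositeTransform-like
// sequence (scale, rotate, translate). Skew is omitted for brevity.
public static class CompositeTransformSketch
{
    public static (double X, double Y) Apply(
        double x, double y,
        double scaleX, double scaleY,
        double rotationDegrees,
        double translateX, double translateY,
        double centerX = 0, double centerY = 0)
    {
        // 1. Scale relative to the center point.
        double sx = (x - centerX) * scaleX;
        double sy = (y - centerY) * scaleY;

        // 2. Rotate around the center point. Screen Y grows downward,
        //    so a positive angle appears clockwise.
        double radians = rotationDegrees * Math.PI / 180.0;
        double rx = sx * Math.Cos(radians) - sy * Math.Sin(radians);
        double ry = sx * Math.Sin(radians) + sy * Math.Cos(radians);

        // 3. Move back from the center point, then translate.
        return (rx + centerX + translateX, ry + centerY + translateY);
    }
}
```

For example, `Apply(10, 0, 2, 2, 90, 5, 0)` puts the point at roughly (5, 20). If the translation were applied first instead, the same values would land it at (0, 30), which is why the order matters when transforms are combined.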

For example, here’s an implementation of RotateTransform:
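Mathematically, a RotateTransform maps each point (x, y) of the element as shown below, where θ is its Angle and (c_x, c_y) are its CenterX and CenterY. Because the Y axis points down on screen, a positive angle turns the element clockwise.

```latex
\begin{aligned}
x' &= c_x + (x - c_x)\cos\theta - (y - c_y)\sin\theta \\
y' &= c_y + (x - c_x)\sin\theta + (y - c_y)\cos\theta
\end{aligned}
```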

What is a manipulation?

Manipulation events enable you to handle multi-finger interaction, receive velocity data, and detect core interactions like drag, pinch, and hold. In many ways, these are companions to the pointer events, but the manipulation framework is the only source for certain data, such as velocities.

Manipulation events

ManipulationStarting event

Occurs when the manipulation processor is first created.

ManipulationStarted event

Occurs when an input device begins a manipulation on the UIElement.

ManipulationDelta event

Occurs when the input device changes position during a manipulation.

ManipulationInertiaStarting event

Occurs when the input device loses contact with the UIElement object during a manipulation and inertia begins.

ManipulationCompleted event

Occurs when a manipulation and inertia on the UIElement are complete.

Sample: Basic gestures

The gestures everyone asks about are these three: move, pinch (or stretch), and rotate. Fortunately, manipulation events help us accomplish all of them. However, you might want a few variations to support keyboard and mouse. Let’s look at how to implement these gestures with events for both.

Here’s our completed sample app:

Move or Translate an element with your finger

Moving an element around the screen is the number one question I get about gestures. Besides a transform, there are other ways to translate an element. The most common is updating the Canvas.Top and Canvas.Left attached properties, as long as the element is in a Canvas. Of course, that is why those properties exist. But in many apps, the Canvas is not part of the logical tree. In this sample, we’ll use the TranslateX and TranslateY properties of our CompositeTransform.

This is all the XAML we need:

In the code above, you will notice that we don’t declare a CompositeTransform. That’s because we will simply create it in code-behind when the user first triggers a manipulation.

Also notice that the ManipulationMode attribute is set. This property tells the framework which manipulations it should process and handle. The default is None. You could set it to All, but in this case, since we only care about translating the element, we are explicit. What’s more, we are not including TranslateInertia, so we don’t have to account for any velocity data. XAML’s built-in type conversion is what lets us use this simple comma-separated syntax. But if you wanted to use C#, just do this:

Handling the ManipulationDelta event is all we have to do. The event arguments provide a Delta property that contains the translation information. It also contains other properties, but because of the manipulation mode we set, some of them will not be populated.

In the code above, we take the element’s CompositeTransform and add the delta translation to its TranslateX and TranslateY. That’s all there is to changing the location of the element and keeping it under the user’s finger.
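Here is a minimal sketch of that accumulation in plain C#, so it can run outside an app. TranslationTracker is a hypothetical stand-in for the CompositeTransform. In a real ManipulationDelta handler, the deltas would come from e.Delta.Translation.X and e.Delta.Translation.Y, and you would first create a CompositeTransform and assign it to RenderTransform if the element doesn’t have one yet.

```csharp
// Hypothetical stand-in for the TranslateX/TranslateY pair of a
// CompositeTransform, so the accumulation logic runs without XAML.
public sealed class TranslationTracker
{
    public double TranslateX { get; private set; }
    public double TranslateY { get; private set; }

    // Mirrors the ManipulationDelta handler: each event reports only the
    // change since the previous event, so we add it to the running offset.
    public void OnDelta(double deltaX, double deltaY)
    {
        TranslateX += deltaX;
        TranslateY += deltaY;
    }
}
```

Two deltas of (10, 5) and (-3, 2) leave the tracker at (7, 7), just as two ManipulationDelta events would move the element.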

There’s a common problem we should solve: the boundary of the movement. You might let users put the element anywhere, but you likely won’t. To create a boundary, just make sure the resulting X and Y are inside your box (a rectangle is certainly easiest). I made a reusable method for this:

With this method we can update our code like this:

In the code above, we haven’t changed the logic. We have, however, prevented the element from being translated more than 300 units from its starting point along either axis. That’s a pretty nice constraint.
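The reusable method and the updated handler logic could look something like this plain C# sketch. Bounds.Clamp and BoundedTranslation are hypothetical names. The box is assumed to be centered on the starting point and to reach a fixed distance along each axis, and the values passed to OnDelta stand in for e.Delta.Translation.X and e.Delta.Translation.Y.

```csharp
using System;

public static class Bounds
{
    // Hypothetical reusable helper: pushes a value back inside [min, max].
    public static double Clamp(double value, double min, double max)
    {
        return Math.Max(min, Math.Min(max, value));
    }
}

public sealed class BoundedTranslation
{
    private readonly double _limit;

    public double TranslateX { get; private set; }
    public double TranslateY { get; private set; }

    public BoundedTranslation(double limit)
    {
        _limit = limit;
    }

    // Same logic as before (add the delta), but the result is kept inside a
    // box reaching `_limit` units from the starting point on each axis.
    public void OnDelta(double deltaX, double deltaY)
    {
        TranslateX = Bounds.Clamp(TranslateX + deltaX, -_limit, _limit);
        TranslateY = Bounds.Clamp(TranslateY + deltaY, -_limit, _limit);
    }
}
```

With `new BoundedTranslation(300)`, deltas of (250, -100) and then (100, -250) stop at (300, -300) instead of (350, -350), and dragging back by 50 moves X to 250 immediately, because the stored value never left the box.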

Pinch, stretch, or scale an element with your finger

Pinch and stretch are two common gestures, handy for letting the user zoom into your UI. Is it for zooming into an image? Sure. Into any other control? Sure. It’s up to you. How do we do it? We change our ManipulationMode to include Scale, like this:

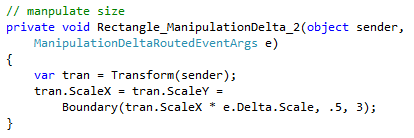

In the code above, I don’t care about the translation data from the previous sample. Instead, we will focus on scale. As a result, some properties in the ManipulationDelta event arguments will be empty. But it also means that the system does not have to calculate those unused properties. Let’s use it:

In the code above, we set both ScaleX and ScaleY to the same value. If we didn’t, the element would stretch unevenly, and we don’t want that. We also enforce a boundary, this time without the reusable method, so that the element can’t scale above 3x or below 0.5x. This is a reasonable boundary, since too much scaling can make the element unreachable for the user.
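A minimal sketch of that scaling logic in plain C#, assuming (as is typical for this event) that e.Delta.Scale is a multiplier where 1.0 means no change. UniformScale is a hypothetical stand-in for the CompositeTransform’s ScaleX and ScaleY; the 0.5x and 3x limits come from the text.

```csharp
using System;

public sealed class UniformScale
{
    // Limits from the text: no larger than 3x, no smaller than 0.5x.
    public const double MinScale = 0.5;
    public const double MaxScale = 3.0;

    // Stand-ins for CompositeTransform.ScaleX/ScaleY; both always match.
    public double ScaleX { get; private set; } = 1.0;
    public double ScaleY { get; private set; } = 1.0;

    // deltaScale plays the role of e.Delta.Scale (1.0 = no change).
    public void OnDelta(double deltaScale)
    {
        double proposed = ScaleX * deltaScale;
        double clamped = Math.Max(MinScale, Math.Min(MaxScale, proposed));

        // Same value on both axes so the element never stretches unevenly.
        ScaleX = clamped;
        ScaleY = clamped;
    }
}
```

Multiplying rather than adding keeps the gesture proportional: a pinch that halves the finger distance halves the current size, whatever that size is.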

Rotate an element with your finger(s)

Rotation is the next gesture to handle. Rotation is tricky because the angle relative to the center of rotation has to be recalculated on every change. This is one reason we don’t ask the system to calculate delta values we don’t need. Having the framework do this is also a wonderful time-saver for a developer, since the math involved isn’t simple. Let’s set up the XAML:

In the code above we set the manipulation mode to Rotate; that is what we are demoing.

In the code above, all we have to do is add the delta rotation to the current rotation. If the user rotates the other way, the delta has the opposite sign. If you wanted, you could constrain this, too. Such little code accomplishes so much. It’s a thing of beauty. And that’s all there is to it.
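The same idea as a plain C# sketch, including the optional constraint. RotationTracker is hypothetical: Rotation stands in for CompositeTransform.Rotation, and the values passed to OnDelta stand in for e.Delta.Rotation, in degrees. Whether to limit the angle, and by how much, is up to you.

```csharp
using System;

public sealed class RotationTracker
{
    // Optional limit in degrees; null means the element rotates freely.
    private readonly double? _maxAbsDegrees;

    public double Rotation { get; private set; }

    public RotationTracker(double? maxAbsDegrees = null)
    {
        _maxAbsDegrees = maxAbsDegrees;
    }

    // Add the delta to the current rotation; a gesture in the other
    // direction simply arrives with the opposite sign.
    public void OnDelta(double deltaDegrees)
    {
        double proposed = Rotation + deltaDegrees;

        if (_maxAbsDegrees.HasValue)
        {
            double limit = _maxAbsDegrees.Value;
            proposed = Math.Max(-limit, Math.Min(limit, proposed));
        }

        Rotation = proposed;
    }
}
```

A free tracker that receives 30 and then -45 ends at -15 degrees; a tracker created with a 45-degree limit stops at 45 no matter how far the user keeps turning.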

Scale an element with the mouse wheel

But what if touch manipulations are not enough? What if you want to enable scaling like other Windows apps? What if you want to let the user use CTRL+MouseWheel to scale your element? Is it possible? Are you kidding? Of course. Start with this:

As you can see in the code above, we don’t need the manipulation mode or the manipulation events. All we care about is the PointerWheelChanged event. From within its handler, we can determine the amount of change since the last event and simply calculate the resulting scale. Like this:

In the code above, we handle the PointerWheelChanged event. First, we continue only if the Control key is pressed. We check this using the GetKeyState method, which reads keyboard state outside the context of any screen element. Then we continue only if the mouse wheel is vertical, not horizontal. We check this using the IsHorizontalMouseWheel property, which we reach through the event arguments (e.GetCurrentPoint(...).Properties). Then we scale based on the MouseWheelDelta property, found in the same place. You, as the developer, can decide whether to multiply that value by .001, as I do, or by some other amount.
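Here is the decision logic from that paragraph as a hedged, plain C# sketch. WheelScale.Next is a hypothetical helper. In the handler, wheelDelta would come from e.GetCurrentPoint(element).Properties.MouseWheelDelta, isHorizontalWheel from Properties.IsHorizontalMouseWheel, and controlDown from checking CoreWindow.GetForCurrentThread().GetKeyState(VirtualKey.Control) for CoreVirtualKeyStates.Down. The sketch assumes the scaled delta is added to the current scale, and it borrows the 0.5x to 3x limits from the pinch sample, which the wheel sample in the text may not apply.

```csharp
using System;

public static class WheelScale
{
    // Multiplier from the text; tune to taste.
    public const double Sensitivity = 0.001;

    // Returns the new scale, or the current one when the event should be
    // ignored (Control not held, or the wheel is horizontal).
    public static double Next(double currentScale, int wheelDelta,
                              bool controlDown, bool isHorizontalWheel,
                              double min = 0.5, double max = 3.0)
    {
        if (!controlDown || isHorizontalWheel)
            return currentScale;

        // Wheel up (positive delta) grows the element, wheel down shrinks it.
        double proposed = currentScale + wheelDelta * Sensitivity;
        return Math.Max(min, Math.Min(max, proposed));
    }
}
```

With the .001 multiplier, one notch of a typical mouse wheel (a delta of 120) changes the scale by 0.12, so `Next(1.0, 120, true, false)` returns about 1.12.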

Rotate using mouse movements

Here’s a scenario that enables rotation with a mouse instead of the rotate touch gesture. Since a mouse doesn’t actually rotate, but simply moves along the X and Y axes, deciding how to rotate the element is tricky. I decided to rotate the element based on the angle of the pointer from the center of the element. You might want something different. I reused some logic from this cool article to do it.

In the code above, I have the “YellowRectangle” that we will rotate. On top of it, I placed a transparent rectangle with the PointerMoved event attached. I did this because, as the element rotates, pointer positions measured relative to it rotate along with it, so they no longer give a stable reference. It is easier to create a reference element and set the real element’s IsHitTestVisible property to false.

In the code above, I am accomplishing one thing: calculating the angle the rotate transform should use, based on where the pointer is relative to the center of the transparent rectangle. The math isn’t super-difficult, but it is complex and error-prone enough to make us appreciate the work the manipulation events do for us.
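This may differ from the logic in the article mentioned above, but a common way to compute that angle is Math.Atan2, sketched here in plain C#. PointerAngle.Degrees is a hypothetical helper. In the PointerMoved handler, the pointer position would come from e.GetCurrentPoint(overlay).Position and the center from overlay.ActualWidth / 2 and overlay.ActualHeight / 2, where overlay is the transparent rectangle.

```csharp
using System;

public static class PointerAngle
{
    // Angle, in degrees, of the pointer relative to a center point.
    // Screen Y grows downward, so Atan2 already yields clockwise-positive
    // angles, the same direction a positive rotation turns an element.
    public static double Degrees(double pointerX, double pointerY,
                                 double centerX, double centerY)
    {
        double radians = Math.Atan2(pointerY - centerY, pointerX - centerX);
        return radians * 180.0 / Math.PI;
    }
}
```

The result lies between -180 and 180 degrees: a pointer directly right of the center gives 0, directly below gives 90. Assigning it to the rotation (with the transform centered on the element) turns the element’s right-hand side toward the pointer.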

And that’s it

Believe me, introducing touch and pointer gestures is just a matter of doing it. The framework is already in place for practically everything you want to do. Complex gestures might need a little more code, but it’s also worth asking yourself, “Am I creating a new gesture? Is there an existing gesture I could use instead?” That question can save you a lot of heartache.

Get the source code here.

Best of luck!
